
Table of Contents

- Intro

- Catching Problems Early With Eager Instantiation

- Integration Testing

- Asynchronous Container Creation

- Bootstrap Container

- Decorators

- Migrating a Codebase to Use DI

- Further Reading

Intro

In the first post of this series, we talked about what dependency injection is, why it is useful, and how to implement it.

In this article, we’ll present additional techniques surrounding dependency injection that will allow us to deal with many real-world challenges that arise when using DI.

Catching Problems Early With Eager Instantiation

When we’re using manual dependency injection, we create all dependencies up front, before they’re needed; that is, dependencies are instantiated eagerly.

// container.ts

// We're creating all our dependencies
// at once, before the application's entry
// point
const depA = makeDepA();

const depB = makeDepB({
  depA,
});

const depC = makeDepC({
  depB,
});

export const container = {
  depA,
  depB,
  depC,
};

Since we’re creating them eagerly, we can’t forget to create dependencies used by other services:

// container.ts
const depB = makeDepB({
  // Oops, we forgot to create this one,
  // but the compiler will warn us!
  depA,
});

const depC = makeDepC({
  depB,
});

export const container = {
  depB,
  depC,
};

Also, many things can go wrong when resolving dependencies: an exception may be thrown during creation, we may have unhandled cyclic dependencies, or something else may break while building the container. Whatever happens, we’ll know immediately, as soon as we try to run the application.

That means we’ll only be able to start the application if all dependencies have been created correctly.
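To make this concrete, here is a small sketch in plain TypeScript, with no DI library involved. The factories, the `Config` type, and its `apiUrl` field are hypothetical; `makeDepB` simply validates its configuration while being built, so a misconfiguration surfaces the moment the container is created rather than when some feature first uses `depB`.

```typescript
// Hypothetical dependency types and factories, for illustration only
type DepA = () => string;
type DepB = () => string;
type DepC = () => string;
type Config = { apiUrl?: string };

const makeDepA = (): DepA => () => "A";

// Validates its configuration while being built,
// so a misconfiguration throws at construction time
const makeDepB = ({ depA, config }: { depA: DepA; config: Config }): DepB => {
  if (!config.apiUrl) throw new Error("depB: missing apiUrl");
  const apiUrl = config.apiUrl;
  return () => `B(${depA()}) via ${apiUrl}`;
};

const makeDepC = ({ depB }: { depB: DepB }): DepC => () => `C(${depB()})`;

// Manual DI: every dependency is created right away, in dependency order
const createContainer = (config: Config) => {
  const depA = makeDepA();
  const depB = makeDepB({ depA, config });
  const depC = makeDepC({ depB });
  return { depA, depB, depC };
};

// A valid configuration produces a working container...
console.log(createContainer({ apiUrl: "https://example.com" }).depC());

// ...while a broken one fails at startup, before any code path uses depB
try {
  createContainer({});
} catch (error) {
  console.error("Startup failed:", (error as Error).message);
}
```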

By contrast, most automatic DI containers use a different approach, where dependencies are created only when needed, that is, dependencies are instantiated lazily.

One reason for this approach is to reduce the application’s startup time, which, depending on how many dependencies you have and how expensive they are to create, can make a significant difference, especially for tests.

The caveat of creating dependencies lazily is that if there’s a flaw in how some dependency is created, it will only show up when we reach a part of the application that uses that dependency.

For example:

// container.ts
//...
export const dependenciesResolvers = {
  // depA resolver is missing!
  depB: asFunction(makeDepB).singleton(),
  depC: asFunction(makeDepC).singleton(),
  //...
};
//...

In the situation depicted above, we forgot to register the depA resolver, which is used by depB. However, Awilix resolves dependencies lazily, so when developing/testing we’ll only ever notice this bug if we end up using depB (or any other code that depends directly or indirectly on depA). Otherwise, we’ll miss it and it’ll blow up in production.

Trust me, this does happen and has happened to me more than once.
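The sketch below imitates lazy resolution with a hand-written, Proxy-based container. It is a hypothetical stand-in for illustration, not Awilix’s actual implementation: dependencies are built on first access and cached, so a missing registration only shows up on the code path that needs it.

```typescript
// Untyped cradle for brevity; each resolver receives it and builds one dependency
type Cradle = any;
type Resolvers = Record<string, (cradle: Cradle) => unknown>;

// Hypothetical lazy container: builds each dependency on first
// access and caches it afterwards (singleton-style)
const createLazyContainer = (resolvers: Resolvers): Cradle => {
  const registry = new Map(Object.entries(resolvers));
  const cache = new Map<string, unknown>();
  const cradle: Cradle = new Proxy(
    {},
    {
      get(_target, name) {
        if (typeof name !== "string") return undefined;
        if (!cache.has(name)) {
          const resolver = registry.get(name);
          if (!resolver) throw new Error(`Could not resolve '${name}'`);
          cache.set(name, resolver(cradle));
        }
        return cache.get(name);
      },
    }
  );
  return cradle;
};

const container = createLazyContainer({
  // depA resolver is missing!
  depB: ({ depA }) => () => `B(${depA()})`,
  depC: () => () => "C",
});

// depC never touches depA, so everything looks fine...
console.log(container.depC()); // "C"

// ...until some code path finally needs depB
try {
  container.depB();
} catch (error) {
  console.error((error as Error).message); // Could not resolve 'depA'
}
```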

But there’s a remedy for that, which is to force early instantiation during development:

// container.ts
// ...
export const container =
  process.env.NODE_ENV === "development"
    ? {
        // By spreading the cradle,
        // we force all dependencies to
        // be constructed, so if there is
        // any missing dependency (or any other issue, like
        // problems with cyclic dependencies), we'll
        // notice during development
        ...awilixContainer.cradle,
      }
    : awilixContainer.cradle;

And that’s it.
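Spreading forces instantiation because copying an object’s properties reads every enumerable key. The following sketch reproduces the idea with a hypothetical, hand-written Proxy container (not Awilix’s code): the `ownKeys` and `getOwnPropertyDescriptor` traps expose the registered names, and the `eager` flag stands in for the `NODE_ENV` check.

```typescript
type Cradle = any;
type Resolvers = Record<string, (cradle: Cradle) => unknown>;

// Hypothetical lazy container whose registered names are also
// enumerable, via the ownKeys/getOwnPropertyDescriptor traps
const createLazyContainer = (resolvers: Resolvers): Cradle => {
  const registry = new Map(Object.entries(resolvers));
  const cache = new Map<string, unknown>();
  const cradle: Cradle = new Proxy(
    {},
    {
      get(_target, name) {
        if (typeof name !== "string") return undefined;
        if (!cache.has(name)) {
          const resolver = registry.get(name);
          if (!resolver) throw new Error(`Could not resolve '${name}'`);
          cache.set(name, resolver(cradle));
        }
        return cache.get(name);
      },
      ownKeys: () => Array.from(registry.keys()),
      getOwnPropertyDescriptor: (_target, name) =>
        typeof name === "string" && registry.has(name)
          ? { enumerable: true, configurable: true }
          : undefined,
    }
  );
  return cradle;
};

// `eager` stands in for process.env.NODE_ENV === "development"
const createContainer = (resolvers: Resolvers, eager: boolean): Cradle => {
  const cradle = createLazyContainer(resolvers);
  // Spreading reads every registered key, which builds every dependency now
  return eager ? { ...cradle } : cradle;
};

const resolvers: Resolvers = {
  // depA resolver is missing!
  depB: ({ depA }) => () => `B(${depA()})`,
  depC: () => () => "C",
};

createContainer(resolvers, false); // lazy: no error (yet)

try {
  createContainer(resolvers, true); // eager: fails right away
} catch (error) {
  console.error((error as Error).message); // Could not resolve 'depA'
}
```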

Another interesting complementary technique is to have an integration test that instantiates all dependencies. That way, these kinds of problems can be caught by our CI pipeline:

// container.test.ts
import { container } from "./container";

describe("When all dependencies are instantiated", () => {
  it("Things don't blow up", () => {
    expect(() => ({ ...container })).not.toThrow();
  });
});

Integration Testing

In the first post, we used dependency injection to write unit tests for services; now we’ll see that it is even more powerful for integration testing.

The line between unit, integration, and end-to-end tests is blurry, and everybody seems to have a different opinion on where it should be drawn. So, to keep the discussion precise, we need to agree on definitions; otherwise, we’ll be using the same words to mean different things.

In the scope of this post, I’ll use the following definition of unit and integration tests:

Unit tests are those where we mock all direct dependencies of whatever we consider to be our unit. Whether that’s a single function, a single class, or a single file doesn’t matter, as long as the only thing that could break these unit tests is a change to the unit itself.

In other words, no change to anything external to that unit (aside, possibly, from the runtime itself) should ever break those unit tests.

Everything else is considered to be an integration test. So if we don’t mock some direct dependency of the unit under test, either because we’re not mocking anything at all, or because we chose to mock some indirect dependency instead, we’ll consider that to be an integration test.

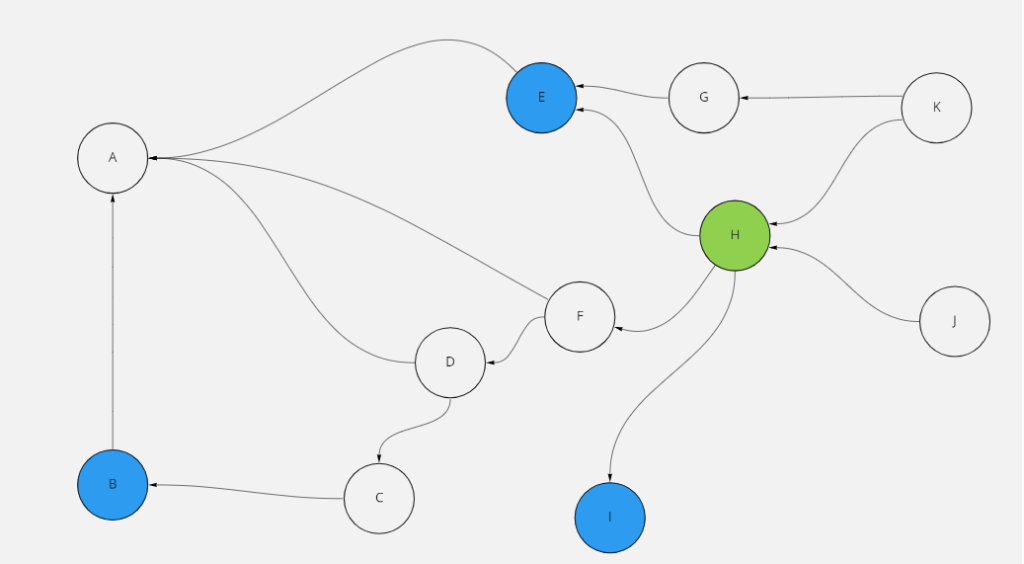

Take a look at the following graph:

The picture above depicts a dependency graph where each node is a service, and arrows represent the “depends on” relation.

The green node represents the service (our unit) we are testing, and the blue nodes represent the services we are mocking.

Notice that in this case, all direct dependencies of the service under test are being mocked, and only those.

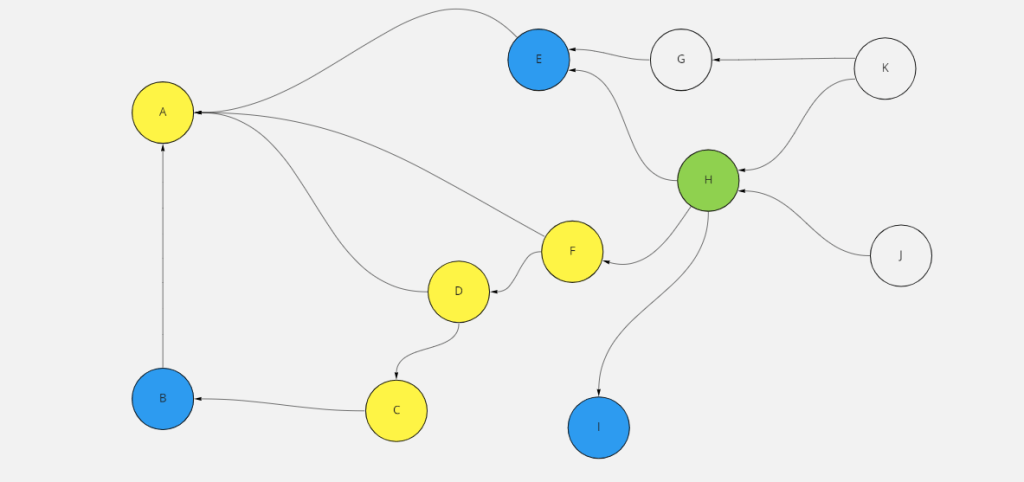

Now here’s a different scenario:

In the above picture, the service under test now is using the actual implementation for one of its dependencies, and it’s mocking some indirect dependency “down the road”.

Going back to our randomNumberList (which was introduced in the first post), suppose we wanted to test it, but instead of using a mocked implementation of randomNumber, we wanted to use the actual fastRandomNumber implementation.

In this case, we still need to mock randomGenerator, otherwise, we can’t control the numbers generated, and thus it will be impossible to test. But notice that randomGenerator is not a direct dependency of randomNumberList.

// randomNumber.ts
export type RandomNumber = (max: number) => number;

// fastRandomNumber.ts
import { RandomNumber } from "./randomNumber";

type Dependencies = {
  randomGenerator: () => number;
};

export const makeFastRandomNumber =
  ({ randomGenerator }: Dependencies): RandomNumber =>
  (max: number): number => {
    return Math.floor(randomGenerator() * (max + 1));
  };

// randomNumberList.ts
import { RandomNumber } from "./randomNumber";

type Dependencies = {
  randomNumber: RandomNumber;
};

export const makeRandomNumberList =
  ({ randomNumber }: Dependencies) =>
  (max: number, length: number): Array<number> => {
    return Array(length)
      .fill(null)
      .map(() => randomNumber(max));
  };

// randomNumberList.test.ts
import { makeFastRandomNumber } from "./fastRandomNumber";
import { makeRandomNumberList } from "./randomNumberList";

describe("General case", () => {
  it("Generates random number list correctly", () => {
    const randomGenerator = jest
      .fn()
      .mockReturnValueOnce(0.23)
      .mockReturnValueOnce(0.11)
      .mockReturnValueOnce(0.3)
      .mockReturnValueOnce(0.87);

    // The real fastRandomNumber, fed by the mocked randomGenerator
    const fastRandomNumber = makeFastRandomNumber({ randomGenerator });
    const randomNumberList = makeRandomNumberList({
      randomNumber: fastRandomNumber,
    });

    const actualList = randomNumberList(10, 4);
    // floor(0.23 * 11) = 2, floor(0.11 * 11) = 1, and so on
    const expectedList: Array<number> = [2, 1, 3, 9];

    expect(actualList).toStrictEqual(expectedList);
  });
});

One of the challenges of integration testing, when we want to mock some indirect dependency, is that we need to recreate all the services along the way until we reach the indirect dependency we want to mock:

In the above picture, yellow nodes are the dependencies we need to create, not because we are mocking them, but because they’re “in-between” the service we’re testing and the dependencies we’re mocking.

The logic is as follows:

We’re testing H, which depends on E, F, and I. However, E and I are being mocked, so we don’t need to worry about their dependencies.

F, on the other hand, is not being mocked, which means we need to create it.

To create F, we need to provide it with A and D. Since they’re not mocked, we also need to create them.

A is a terminal dependency, which means it doesn’t depend on anything. So for A, nothing else needs to be created/provided.

F depends on D, which is not being mocked, so we need to create it.

D, in turn, depends on C and A. However, we already have A, so we need to create C.

C depends on B, and B is being mocked, so we finally have everything we need.
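Here is roughly what that manual wiring looks like for the graph just described. The factories `makeA` through `makeH` are hypothetical, and each service just reports how it was built, which makes the resulting wiring easy to inspect.

```typescript
type Service = () => string;

// Hypothetical factories for the graph's nodes; each service just
// describes how it was built, so the wiring is easy to inspect
const makeA = (): Service => () => "A";
const makeC = ({ b }: { b: Service }): Service => () => `C(${b()})`;
const makeD = ({ c, a }: { c: Service; a: Service }): Service => () =>
  `D(${c()},${a()})`;
const makeF = ({ a, d }: { a: Service; d: Service }): Service => () =>
  `F(${a()},${d()})`;
const makeH = ({ e, f, i }: { e: Service; f: Service; i: Service }): Service => () =>
  `H(${e()},${f()},${i()})`;

// Mocks (blue nodes): E, I and B
const e: Service = () => "mockE";
const i: Service = () => "mockI";
const b: Service = () => "mockB";

// "In-between" services (yellow nodes): not mocked, but we still
// have to build them by hand, in dependency order, to reach B
const a = makeA();
const c = makeC({ b });
const d = makeD({ c, a });
const f = makeF({ a, d });

// Finally, the service under test
const h = makeH({ e, f, i });
console.log(h()); // H(mockE,F(A,D(C(mockB),A)),mockI)
```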

As you can see, if we proceed like this, integration testing becomes very cumbersome.

Fortunately, there is a technique that makes this process of mocking just a few select dependencies much more elegant, as we’ll see below.

We’ll need a helper:

// createContainerMock.ts
import { createContainer as createContainerBuilder } from "awilix";
import { dependenciesResolvers, Container } from "./container";
import { merge } from "lodash";

// Most times we won't be providing a full-fledged
// implementation for mocked dependencies,
// so it's useful to be able to pass
// whatever we want
export type MockedDependenciesResolvers = {
  [Key in keyof Container]?: unknown;
};

export const createContainerMock = (
  mockedDependenciesResolvers: MockedDependenciesResolvers
) => {
  const containerBuilder = createContainerBuilder<Container>();

  // Pay attention to this part:
  // We're using the "canonical" dependencies
  // that we've registered in the "original"
  // container and replacing only the dependencies
  // we want to mock, so we don't need to
  // create everything from scratch.
  // lodash's merge mutates its first argument, so we
  // merge into a fresh object to keep the canonical
  // resolvers (and other tests) unaffected by these mocks.
  containerBuilder.register(
    merge({}, dependenciesResolvers, mockedDependenciesResolvers)
  );

  const container = containerBuilder.cradle;

  return container;
};

Now, setting up our integration test is a breeze: with this technique, we only need to concern ourselves with the dependencies we want to mock, as all the other ones will be created automatically by our helper function.
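Since Awilix isn’t needed to see the idea, the following sketch uses a minimal, hypothetical lazy container in its place and applies the “canonical resolvers plus overrides” technique to the `randomNumberList` example. Only `randomGenerator` is mocked; the real `fastRandomNumber` and `randomNumberList` are built on top of it.

```typescript
type Cradle = any;
type Resolvers = Record<string, (cradle: Cradle) => unknown>;

// Hypothetical stand-in for Awilix: builds each dependency lazily, once
const createContainer = (resolvers: Resolvers): Cradle => {
  const registry = new Map(Object.entries(resolvers));
  const cache = new Map<string, unknown>();
  const cradle: Cradle = new Proxy(
    {},
    {
      get(_target, name) {
        if (typeof name !== "string") return undefined;
        if (!cache.has(name)) {
          const resolver = registry.get(name);
          if (!resolver) throw new Error(`Could not resolve '${name}'`);
          cache.set(name, resolver(cradle));
        }
        return cache.get(name);
      },
    }
  );
  return cradle;
};

const makeFastRandomNumber =
  ({ randomGenerator }: { randomGenerator: () => number }) =>
  (max: number): number =>
    Math.floor(randomGenerator() * (max + 1));

const makeRandomNumberList =
  ({ randomNumber }: { randomNumber: (max: number) => number }) =>
  (max: number, length: number): number[] =>
    Array.from({ length }, () => randomNumber(max));

// The "canonical" resolvers, as they would be registered in container.ts
const dependenciesResolvers: Resolvers = {
  randomGenerator: () => Math.random,
  randomNumber: makeFastRandomNumber,
  randomNumberList: makeRandomNumberList,
};

// Replaces only the resolvers we pass in; spreading into a new object
// leaves dependenciesResolvers untouched, so mocks can't leak between tests
const createContainerMock = (mockedResolvers: Resolvers) =>
  createContainer({ ...dependenciesResolvers, ...mockedResolvers });

const generated = [0.23, 0.11, 0.3, 0.87];
const container = createContainerMock({
  randomGenerator: () => () => generated.shift() ?? 0,
});
console.log(container.randomNumberList(10, 4)); // → [2, 1, 3, 9]
```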

Alternative Technique

There’s one small caveat: this technique couples our integration tests to Awilix. However, there’s a variant of it that isn’t coupled to any library and can even be used with manual dependency injection.

In this variant, we first wrap the container creation with a function that takes the factories we use to create dependencies as parameters:

Before:

// container.ts
import {
  asFunction,
  asValue,
  createContainer as createContainerBuilder,
  InjectionMode,
} from "awilix";
import { AwilixUtils } from "./utils/awilix";

const dependenciesResolvers = {
  depA: asFunction(makeDepA).singleton(),
  depB:
    process.env.NODE_ENV === "development"
      ? asValue(depBMock)
      : asFunction(makeDepB).singleton(),
  depC: asFunction(makeDepC).singleton(),
};

const containerBuilder = createContainerBuilder<
  AwilixUtils.Container<typeof dependenciesResolvers>
>({
  injectionMode: InjectionMode.PROXY,
});

containerBuilder.register(dependenciesResolvers);

export const container =
  process.env.NODE_ENV === "development"
    ? { ...containerBuilder.cradle }
    : containerBuilder.cradle;

export type Container = typeof container;

After:

// container.ts
import {
  asFunction,
  createContainer as createContainerBuilder,
  InjectionMode,
} from "awilix";
import { AwilixUtils } from "./utils/awilix";

export const dependenciesFactories = {
  depA: makeDepA,
  // For this technique to work,
  // we need to have a single possible resolver
  // for each dependency, so if we have to choose
  // the implementation for a given service,
  // this must happen here.
  // So now we're wrapping depBMock with a function
  depB: process.env.NODE_ENV === "development" ? () => depBMock : makeDepB,
  depC: makeDepC,
};

export const createContainer = (factories: typeof dependenciesFactories) => {
  const dependenciesResolvers = {
    depA: asFunction(factories.depA).singleton(),
    depB: asFunction(factories.depB).singleton(),
    depC: asFunction(factories.depC).singleton(),
  };

  const containerBuilder = createContainerBuilder<
    AwilixUtils.Container<typeof dependenciesResolvers>
  >({
    injectionMode: InjectionMode.PROXY,
  });

  containerBuilder.register(dependenciesResolvers);

  const container =
    process.env.NODE_ENV === "development"
      ? { ...containerBuilder.cradle }
      : containerBuilder.cradle;

  return container;
};

export const container = createContainer(dependenciesFactories);

export type Container = typeof container;

And then we make a slight modification to our helper:

// createContainerMock.ts
import { dependenciesFactories, Container, createContainer } from "./container";
import { merge } from "lodash";

export type MockedDependenciesFactories = {
  [Key in keyof Container]?: unknown;
};

export const createContainerMock = (
  mockedDependenciesFactories: MockedDependenciesFactories
) => {
  // merge mutates its first argument, so we merge into a fresh
  // object instead of overwriting the canonical factories
  const mergedDependenciesFactories = merge(
    {},
    dependenciesFactories,
    mockedDependenciesFactories
  );

  const container = createContainer(mergedDependenciesFactories);

  return container;
};

Our integration test will look like this:

// randomNumberList.test.ts
import {
  createContainerMock,
  MockedDependenciesFactories,
} from "./createContainerMock";

describe("General case", () => {
  it("Generates random number list correctly", () => {
    const randomGenerator = jest
      .fn()
      .mockReturnValueOnce(0.23)
      .mockReturnValueOnce(0.11)
      .mockReturnValueOnce(0.3)
      .mockReturnValueOnce(0.87);

    // Factories, not values: the mocked factory returns our jest mock
    const mockedDependenciesFactories: MockedDependenciesFactories = {
      randomGenerator: () => randomGenerator,
    };

    const container = createContainerMock(mockedDependenciesFactories);

    const randomNumberList = container.randomNumberList;

    const actualList = randomNumberList(10, 4);
    const expectedList: Array<number> = [2, 1, 3, 9];

    expect(actualList).toStrictEqual(expectedList);
  });
});

And if we’re using manual dependency injection:

// container.ts
export const dependenciesFactories = {
  depA: makeDepA,
  depB: process.env.NODE_ENV === "development" ? () => depBMock : makeDepB,
  depC: makeDepC,
};

export const createContainer = (factories: typeof dependenciesFactories) => {
  const depA = factories.depA();

  const depB = factories.depB({
    depA,
  });

  const depC = factories.depC({
    depA,
    depB,
  });

  // Return the dependencies we just built (not the module-level
  // `container`, which doesn't exist yet when this runs)
  return {
    depA,
    depB,
    depC,
  };
};

export const container = createContainer(dependenciesFactories);

export type Container = typeof container;
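For comparison, here is a self-contained sketch of the factory-based variant with manual dependency injection, again using the `randomNumberList` example. The `Factories` type and the `createContainerMock` signature (taking a `Partial<Factories>`) are assumptions made for this sketch.

```typescript
// Hypothetical types and factories mirroring the randomNumberList example
type RandomGenerator = () => number;
type RandomNumber = (max: number) => number;
type RandomNumberList = (max: number, length: number) => number[];

const makeRandomGenerator = (): RandomGenerator => Math.random;

const makeFastRandomNumber =
  ({ randomGenerator }: { randomGenerator: RandomGenerator }): RandomNumber =>
  (max) =>
    Math.floor(randomGenerator() * (max + 1));

const makeRandomNumberList =
  ({ randomNumber }: { randomNumber: RandomNumber }): RandomNumberList =>
  (max, length) =>
    Array.from({ length }, () => randomNumber(max));

// The "canonical" factories
const dependenciesFactories = {
  randomGenerator: makeRandomGenerator,
  randomNumber: makeFastRandomNumber,
  randomNumberList: makeRandomNumberList,
};

type Factories = typeof dependenciesFactories;

// Manual DI: wires everything eagerly from whichever factories it receives
const createContainer = (factories: Factories) => {
  const randomGenerator = factories.randomGenerator();
  const randomNumber = factories.randomNumber({ randomGenerator });
  const randomNumberList = factories.randomNumberList({ randomNumber });
  return { randomGenerator, randomNumber, randomNumberList };
};

// Overrides only the factories we pass; the spread builds a new object,
// so dependenciesFactories itself is never modified
const createContainerMock = (mockedFactories: Partial<Factories>) =>
  createContainer({ ...dependenciesFactories, ...mockedFactories });

const sequence = [0.23, 0.11, 0.3, 0.87];
const container = createContainerMock({
  randomGenerator: () => () => sequence.shift() ?? 0,
});
console.log(container.randomNumberList(10, 4)); // → [2, 1, 3, 9]
```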

There is one caveat, however: since we’re using the “canonical” dependencies, they might be affected by the configuration we’re running with, for instance, when we use one implementation in production environments and another in non-production environments (which includes the test environment).

Later on, we’ll see a better way to deal with these cases.

Asynchronous Container Creation

To motivate this section, imagine that we’re fetching some configuration values from an external API and that the choice of some of the implementations for our dependencies depends on these values.

Let’s say we have some feature flags that are fetched from an external API, and that one of them switches between two implementations of a foo function: an old implementation and a newer one.

In this case, to create the container, we need to set up the dependencies resolvers; however, these resolvers now depend on the feature flags, which must be fetched asynchronously.

The solution, then, is to turn the container creation “process”, which was originally synchronous, into an asynchronous one, so that we can wait for the feature flags to be fetched:

// fetchFeatureFlags.ts
export const fetchFeatureFlags = async (): Promise<FeatureFlags> => {
  //...
};

export type FeatureFlags = {
  NewFoo: boolean;
};

// container.ts
import { fetchFeatureFlags } from "./fetchFeatureFlags";
import { makeFoo } from "./foo";
import { makeNewFoo } from "./newFoo";
import {
  asFunction,
  InjectionMode,
  createContainer as createContainerBuilder,
} from "awilix";
import { AwilixUtils } from "./utils/awilix";

export const createContainer = async () => {
  const featureFlags = await fetchFeatureFlags();

  const dependenciesResolvers = {
    foo: featureFlags.NewFoo
      ? asFunction(makeNewFoo).singleton()
      : asFunction(makeFoo).singleton(),
    //...
  };

  const containerBuilder = createContainerBuilder<
    AwilixUtils.Container<typeof dependenciesResolvers>
  >({
    injectionMode: InjectionMode.PROXY,
  });

  containerBuilder.register(dependenciesResolvers);

  const container =
    process.env.NODE_ENV === "development"
      ? { ...containerBuilder.cradle }
      : containerBuilder.cradle;

  return container;
};

export type Container = Awaited<ReturnType<typeof createContainer>>;

And then at the application’s entry point:

// index.ts
import { createContainer } from "./container";

const main = async () => {
  const container = await createContainer();

  container.foo();
};

main();

The only difference is that, instead of importing a ready-made container from container.ts, we now have an async function that creates it.
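Stripped of Awilix, the same idea fits in a few lines. In this sketch, `fetchFeatureFlags` is a hypothetical stub that resolves immediately, and `foo` is built directly instead of through resolvers; the point is only that the container can’t exist until the flags have been awaited.

```typescript
type FeatureFlags = { NewFoo: boolean };
type Foo = () => string;

// Hypothetical stand-in for the external API call
const fetchFeatureFlags = async (): Promise<FeatureFlags> => ({ NewFoo: true });

const makeFoo = (): Foo => () => "old foo";
const makeNewFoo = (): Foo => () => "new foo";

// The implementation of foo can only be chosen once the flags
// have arrived, so creating the container becomes asynchronous
const createContainer = async () => {
  const featureFlags = await fetchFeatureFlags();
  return {
    foo: featureFlags.NewFoo ? makeNewFoo() : makeFoo(),
  };
};

type Container = Awaited<ReturnType<typeof createContainer>>;

const main = async () => {
  const container: Container = await createContainer();
  console.log(container.foo()); // "new foo"
};

main();
```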

Caveats

To create our container asynchronously, we only need to make two changes: one where we create it, and another at the application’s entry point, where we use it. So, it’s a fairly straightforward process.

This approach, however, conflicts with our current solution for integration testing, as the latter depends on being able to import the “canonical” dependencies from the container file, and now they are encapsulated inside createContainer.

At first sight, it seems that we could adapt both container.ts and createContainerMock to deal with this:

// container.ts
import { fetchFeatureFlags } from "./fetchFeatureFlags";
import { makeFoo } from "./foo";
import { makeNewFoo } from "./newFoo";
import {
  asFunction,
  InjectionMode,
  createContainer as createContainerBuilder,
} from "awilix";
import { AwilixUtils } from "./utils/awilix";

// We cannot export the resolvers themselves anymore
// because their creation now must be asynchronous.
// But at least we can export this function that creates them.
export const createDependenciesResolvers = async () => {
  const featureFlags = await fetchFeatureFlags();

  const dependenciesResolvers = {
    foo: featureFlags.NewFoo
      ? asFunction(makeNewFoo).singleton()
      : asFunction(makeFoo).singleton(),
    //...
  };

  return dependenciesResolvers;
};

export const createContainer = async () => {
  const dependenciesResolvers = await createDependenciesResolvers();

  const containerBuilder = createContainerBuilder<
    AwilixUtils.Container<typeof dependenciesResolvers>
  >({
    injectionMode: InjectionMode.PROXY,
  });

  containerBuilder.register(dependenciesResolvers);

  const container =
    process.env.NODE_ENV === "development"
      ? { ...containerBuilder.cradle }
      : containerBuilder.cradle;

  return container;
};

export type Container = Awaited<ReturnType<typeof createContainer>>;

// createContainerMock.ts
import { createContainer as createContainerBuilder } from "awilix";
import { createDependenciesResolvers, Container } from "./container";
import { merge } from "lodash";

export type MockedDependenciesResolvers = {
  [Key in keyof Container]?: unknown;
};

export const createContainerMock = async (
  mockedDependenciesResolvers: MockedDependenciesResolvers
) => {
  const containerBuilder = createContainerBuilder<Container>();

  // We need to create the dependencies
  // resolvers asynchronously, instead
  // of importing them directly
  // from the container file
  const dependenciesResolvers = await createDependenciesResolvers();

  containerBuilder.register(
    merge(dependenciesResolvers, mockedDependenciesResolvers)
  );

  const container = containerBuilder.cradle;

  return container;
};

But alas, there is a huge problem with this approach:

Notice that createDependenciesResolvers calls fetchFeatureFlags, which makes an actual call to an external API.

Do we want to be hitting an external service to run any of our integration tests?

The problem here is that, since fetchFeatureFlags doesn’t exist in the container (it’s called before the container is even created), we cannot mock it.

But what if it was in a container?

Bootstrap Container

Whenever the creation of the container gets somewhat complex, we can use another container to create our application container.

Let me say that again: much in the same way we use a container to create the dependencies our application uses, we can treat this container as a dependency that will be created by yet another container.

If we think of the process of creating our dependencies as some sort of “runtime build phase”, then we can think of the process of creating the container as some sort of “boot phase”.

As we’ll be talking about two containers from now on, we’ll call the container that provides our application’s dependencies the application container, and the container that constructs it the bootstrap container.

Let’s see how this works in practice:

// container.ts
// Here we have the application's container
import { fetchFeatureFlags } from "./fetchFeatureFlags";
import { makeFoo } from "./foo";
import { makeNewFoo } from "./newFoo";
import {
  asFunction,
  InjectionMode,
  createContainer as createContainerBuilder,
} from "awilix";
import { AwilixUtils } from "./utils/awilix";

type CreateDependenciesResolversDependencies = {
  fetchFeatureFlags: typeof fetchFeatureFlags;
};

// Now we're injecting fetchFeatureFlags,
// which means that we can mock it
// in our integration tests.
// Like any other factory, it receives its dependencies
// and returns the function the bootstrap container exposes.
export const makeCreateDependenciesResolvers =
  ({ fetchFeatureFlags }: CreateDependenciesResolversDependencies) =>
  async () => {
    const featureFlags = await fetchFeatureFlags();

    const dependenciesResolvers = {
      foo: featureFlags.NewFoo
        ? asFunction(makeNewFoo).singleton()
        : asFunction(makeFoo).singleton(),
      //...
    };

    return dependenciesResolvers;
  };

type CreateDependenciesResolvers = ReturnType<
  typeof makeCreateDependenciesResolvers
>;

type CreateContainerDependencies = {
  createDependenciesResolvers: CreateDependenciesResolvers;
};

export const makeCreateContainer =
  ({ createDependenciesResolvers }: CreateContainerDependencies) =>
  async () => {
    const dependenciesResolvers = await createDependenciesResolvers();

    const containerBuilder = createContainerBuilder<
      AwilixUtils.Container<typeof dependenciesResolvers>
    >({
      injectionMode: InjectionMode.PROXY,
    });

    containerBuilder.register(dependenciesResolvers);

    const container =
      process.env.NODE_ENV === "development"
        ? { ...containerBuilder.cradle }
        : containerBuilder.cradle;

    return container;
  };

type CreateContainer = ReturnType<typeof makeCreateContainer>;

export type Container = Awaited<ReturnType<CreateContainer>>;

And now we set up our bootstrap container:

// bootstrap.ts
import {
  asFunction,
  asValue,
  createContainer as createContainerBuilder,
  InjectionMode,
} from "awilix";
import {
  makeCreateContainer,
  makeCreateDependenciesResolvers,
} from "./container";
import { AwilixUtils } from "./utils/awilix";
import { fetchFeatureFlags } from "./fetchFeatureFlags";

export const bootstrapDependenciesResolvers = {
  // Notice that the functions that are involved
  // in the application container creation
  // are now dependencies of the bootstrap container
  fetchFeatureFlags: asValue(fetchFeatureFlags),
  createDependenciesResolvers: asFunction(
    makeCreateDependenciesResolvers
  ).singleton(),
  createContainer: asFunction(makeCreateContainer).singleton(),
};

const bootstrapContainerBuilder = createContainerBuilder<
  AwilixUtils.Container<typeof bootstrapDependenciesResolvers>
>({
  injectionMode: InjectionMode.PROXY,
});

bootstrapContainerBuilder.register(bootstrapDependenciesResolvers);

export const bootstrapContainer = bootstrapContainerBuilder.cradle;

export type BootstrapContainer = typeof bootstrapContainer;

Then, at the application’s entry point:

// index.ts
import { bootstrapContainer } from "./bootstrap";

const main = async () => {
  // The bootstrap container creates the application
  // container
  const container = await bootstrapContainer.createContainer();

  container.foo();
};

main();

Finally, we can address our integration testing needs:

// createContainerMock.ts
import { createContainer, InjectionMode } from "awilix";
import { merge } from "lodash";
import { Container } from "../container";
import {
  BootstrapContainer,
  bootstrapDependenciesResolvers,
} from "../bootstrap";

// createContainer is left out because this helper
// builds the application container itself
export type MockedBootstrapDependenciesResolvers = {
  [Key in keyof Omit<BootstrapContainer, "createContainer">]?: unknown;
};

export type MockedDependenciesResolvers = {
  [Key in keyof Container]?: unknown;
};

// Now we can mock both the bootstrap container dependencies,
// and the application's dependencies.
export const createContainerMock = async (
  mockedBootstrapDependenciesResolvers: MockedBootstrapDependenciesResolvers,
  mockedDependenciesResolvers: MockedDependenciesResolvers
) => {
  const bootstrapContainerBuilder = createContainer({
    injectionMode: InjectionMode.PROXY,
  });

  // Here we mock bootstrap's dependencies.
  // This is where we can mock API calls
  // that retrieve configuration values
  // that are used to choose which implementation
  // we're going to use for the application's dependencies,
  // for example.
  // Merging into a fresh object keeps the shared
  // bootstrapDependenciesResolvers from being mutated.
  bootstrapContainerBuilder.register(
    merge({}, bootstrapDependenciesResolvers, mockedBootstrapDependenciesResolvers)
  );

  const bootstrapContainer = bootstrapContainerBuilder.cradle;

  // Bootstrap dependencies are used to create
  // application container dependencies resolvers
  const dependenciesResolvers =
    await bootstrapContainer.createDependenciesResolvers();

  const containerBuilder = createContainer({
    injectionMode: InjectionMode.PROXY,
  });

  // Here we mock the application's dependencies
  containerBuilder.register(
    merge(dependenciesResolvers, mockedDependenciesResolvers)
  );

  return containerBuilder.cradle;
};

Writing integration tests:

// foo.test.ts
import { asValue } from "awilix";
import {
  createContainerMock,
  MockedBootstrapDependenciesResolvers,
  MockedDependenciesResolvers,
} from "./createContainerMock";

describe("When 'New Foo' feature flag is on", () => {
  it("Uses new foo implementation", async () => {
    // The real fetchFeatureFlags is async, so the mock is too
    const fetchFeatureFlags = async () => ({
      NewFoo: true,
    });

    const mockedBootstrapDependenciesResolvers: MockedBootstrapDependenciesResolvers =
      {
        fetchFeatureFlags: asValue(fetchFeatureFlags),
      };

    // Suppose foo has some dependency
    // we want to mock
    const someFooDep = jest.fn();

    const mockedDependenciesResolvers: MockedDependenciesResolvers = {
      someFooDep: asValue(someFooDep),
    };

    const container = await createContainerMock(
      mockedBootstrapDependenciesResolvers,
      mockedDependenciesResolvers
    );

    const foo = container.foo;

    // Assertions
    //...
  });
});
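The same structure can be expressed with manual dependency injection. In this sketch, which is an illustration rather than the Awilix setup above, the bootstrap container is a plain object built from overridable defaults, `fetchFeatureFlags` is a hypothetical stub, and `createContainer` builds the application container from whatever `fetchFeatureFlags` it was given.

```typescript
type FeatureFlags = { NewFoo: boolean };
type Foo = () => string;
type FetchFeatureFlags = () => Promise<FeatureFlags>;

// Hypothetical implementations of foo
const makeFoo = (): Foo => () => "old foo";
const makeNewFoo = (): Foo => () => "new foo";

// Application container factory: fetchFeatureFlags is injected,
// so it no longer knows where the flags come from
const makeCreateContainer =
  ({ fetchFeatureFlags }: { fetchFeatureFlags: FetchFeatureFlags }) =>
  async () => {
    const featureFlags = await fetchFeatureFlags();
    return { foo: featureFlags.NewFoo ? makeNewFoo() : makeFoo() };
  };

type BootstrapDependencies = { fetchFeatureFlags: FetchFeatureFlags };

// Stand-in for the real API call (hypothetical)
const bootstrapDefaults: BootstrapDependencies = {
  fetchFeatureFlags: async () => ({ NewFoo: false }),
};

// Bootstrap container: its own dependencies can be overridden,
// and it exposes the function that builds the application container
const createBootstrapContainer = (
  mocked: Partial<BootstrapDependencies> = {}
) => {
  const dependencies = { ...bootstrapDefaults, ...mocked };
  return {
    ...dependencies,
    createContainer: makeCreateContainer(dependencies),
  };
};

// Entry point: uses the default (here, stubbed) fetch
createBootstrapContainer()
  .createContainer()
  .then((container) => console.log(container.foo())); // "old foo"

// Test: mock the API call at the bootstrap level, no network involved
createBootstrapContainer({ fetchFeatureFlags: async () => ({ NewFoo: true }) })
  .createContainer()
  .then((container) => console.log(container.foo())); // "new foo"
```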

In general, having a bootstrap container is useful for integration testing when we have lots of dependencies whose implementation choice depends on a shared configuration value and we want to be able to have all these dependencies behave as if this value was some specific value, without having to mock them one by one.

For example, imagine that we have several dependencies whose implementation depends on an isServer value that tells us whether our code is running on the server or in the browser, which is very common when using SSR/SSG:

// container.ts

// Configuration Values
const isServer = typeof window === "undefined";

export const dependenciesResolvers = {
  foo1: isServer
    ? asFunction(makeServerFoo1).singleton()
    : asFunction(makeBrowserFoo1).singleton(),
  foo2: isServer
    ? asFunction(makeServerFoo2).singleton()
    : asFunction(makeBrowserFoo2).singleton(),
  foo3: isServer
    ? asFunction(makeServerFoo3).singleton()
    : asFunction(makeBrowserFoo3).singleton(),
  //...
};

//...

Suppose we want to do some integration testing and want our dependencies to behave as if we’re on the server.

Without a bootstrap container (that is, with the single-container createContainerMock we used earlier), we’d have to override each of these dependencies in our tests to make them use the server implementation:

describe("When on the server", () => {
  it("bar works correctly", () => {
    const mockedDependenciesResolvers: MockedDependenciesResolvers = {
      // We need to mock one by one
      foo1: asFunction(makeServerFoo1).singleton(),
      foo2: asFunction(makeServerFoo2).singleton(),
      foo3: asFunction(makeServerFoo3).singleton(),
    };

    const container = createContainerMock(mockedDependenciesResolvers);

    const bar = container.bar;

    // Assertions...
  });
});

This happens because the application container knows where configuration values are being pulled from, which makes it hard to mock them.

Through the use of a bootstrap container, we can extract these configuration values from the application container as dependencies:

// container.ts
type CreateDependenciesResolversDependencies = {
  isServer: boolean;
};

// We don't care about where isServer is coming from
export const makeCreateDependenciesResolvers =
  ({ isServer }: CreateDependenciesResolversDependencies) =>
  async () => {
    return {
      foo1: isServer
        ? asFunction(makeServerFoo1).singleton()
        : asFunction(makeBrowserFoo1).singleton(),
      foo2: isServer
        ? asFunction(makeServerFoo2).singleton()
        : asFunction(makeBrowserFoo2).singleton(),
      foo3: isServer
        ? asFunction(makeServerFoo3).singleton()
        : asFunction(makeBrowserFoo3).singleton(),
      //...
    };
  };

//...

This way, we just need to mock isServer in the bootstrap container, and all the corresponding implementations will be chosen automatically:

describe("When on the server", () => {
  it("bar works correctly", async () => {
    const mockedBootstrapDependenciesResolvers: MockedBootstrapDependenciesResolvers =
      {
        isServer: asValue(true),
      };

    const mockedDependenciesResolvers = {};

    // createContainerMock is async, so we need to await it
    const container = await createContainerMock(
      mockedBootstrapDependenciesResolvers,
      mockedDependenciesResolvers
    );

    const bar = container.bar;

    // Assertions...
  });
});
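A manual-DI sketch of the same idea: the bootstrap container is the only place that knows how `isServer` is computed, and a single override flips every implementation. The `makeServerFoo*`/`makeBrowserFoo*` factories and the `createBootstrapContainer` helper are hypothetical, and `!("window" in globalThis)` replaces `typeof window === "undefined"` only so the sketch type-checks without DOM typings.

```typescript
type Foo = () => string;

// Hypothetical server/browser implementations
const makeServerFoo1 = (): Foo => () => "server foo1";
const makeBrowserFoo1 = (): Foo => () => "browser foo1";
const makeServerFoo2 = (): Foo => () => "server foo2";
const makeBrowserFoo2 = (): Foo => () => "browser foo2";

// Application side: receives isServer, doesn't care where it comes from
const makeCreateDependencies =
  ({ isServer }: { isServer: boolean }) =>
  () => ({
    foo1: isServer ? makeServerFoo1() : makeBrowserFoo1(),
    foo2: isServer ? makeServerFoo2() : makeBrowserFoo2(),
  });

type BootstrapDependencies = { isServer: boolean };

// Bootstrap side: the only place that knows how isServer is computed
const createBootstrapContainer = (
  mocked: Partial<BootstrapDependencies> = {}
) => {
  const dependencies: BootstrapDependencies = {
    isServer: !("window" in globalThis),
    ...mocked,
  };
  return {
    ...dependencies,
    createDependencies: makeCreateDependencies(dependencies),
  };
};

// In a test, one override switches every implementation at once
const serverDeps = createBootstrapContainer({ isServer: true }).createDependencies();
console.log(serverDeps.foo1(), serverDeps.foo2()); // server foo1 server foo2
```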

Using a bootstrap container is a very powerful technique that makes our application even more decoupled. On the other hand, it also adds a significant amount of complexity, so I’d suggest starting with a single container and only introducing a bootstrap container if and when the need arises.

Decorators

Originally, the term “decorator” in software engineering referred to the decorator pattern, first introduced by the GoF in their famous Design Patterns book, and its original definition is closely tied to the idea of classes/objects.

Over time, however, the meaning of this term has been extended to also encompass functions, and it’s this broader definition that we’re going to work with here.

In this broader sense, decorators are functions or classes that extend the behavior of other functions or classes in such a way that the decorated function or class is a subtype of the decoratee, and can thus be used in its place (think Liskov Substitution Principle).

I understand that this might sound a little bit vague, so let’s see some examples.

Say we have a function foo:

const foo = (a: number, b: number, c: string) => {
  // Do something, it doesn't really
  // matter what
  return result;
};

Suppose that we now want to log calls to foo, along with the arguments and return value of each call.

One way to do that is to modify foo’s code by inlining calls to console.log:

const foo = (a: number, b: number, c: string) => {
  console.log("Executing foo");
  console.log("Args", a, b, c);
  // Do something, it doesn't really
  // matter what
  console.log("Result", result);
  return result;
};

But what if there are a bunch of other functions whose execution we want to log as well? Or what if the function has multiple return paths?

const foo = (a: number, b: number, c: string) => {
  console.log("Executing foo");
  console.log("Args", a, b, c);

  if (/* ... */) {
    // ...
    console.log("Result", result);
    return result;
  }

  if (/* ... */) {
    // ...
    console.log("Result", result);
    return result;
  }

  console.log("Result", result);
  return result;
};

A better way to do this is to create a decorator and then wrap foo with it:

const withLogging =
  <Args extends unknown[], Result>(decoratee: (...args: Args) => Result) =>
  (...args: Args): Result => {
    console.log(`Executing ${decoratee.name}`);
    console.log("Args", ...args);
    const result = decoratee(...args);
    console.log("Result", result);
    return result;
  };

const fooWithLogging = withLogging(foo);

This way, we adhere to the open/closed principle: we extend the behavior of our function without modifying it, we can reuse the same logic for any other function, and it doesn’t matter how many return paths the function has.

We may also have a class decorator:

class Cat {
  constructor(name: string, age: number) {
    this.name = name;
    this.age = age;
  }

  public getName(): string {
    return this.name;
  }

  public setName(name: string): void {
    this.name = name;
  }

  public getAge(): number {
    return this.age;
  }

  public setAge(age: number): void {
    this.age = age;
  }

  private name: string;
  private age: number;
}

class CatWithLogging {
  constructor(cat: Cat) {
    this.cat = cat;
  }

  public getName(): string {
    console.log("Executing Cat.getName");
    console.log("Args");
    const result = this.cat.getName();
    console.log("Result", result);
    return result;
  }

  //...

  private cat: Cat;
}
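One TypeScript-specific caveat: because Cat declares private members, TypeScript compares it nominally, so CatWithLogging can’t be passed where a Cat is expected, even if it implemented every public method. A minimal sketch of one way around this, assuming we introduce a hypothetical CatLike interface that consumers depend on and that both the original class and its decorator implement. This makes the decorator a real subtype of what consumers expect; the logCall helper and the greet consumer are also made up for illustration.

```typescript
// Hypothetical interface: consumers depend on CatLike instead of the
// concrete Cat class, so a decorator can be substituted transparently.
interface CatLike {
  getName(): string;
  setName(name: string): void;
  getAge(): number;
  setAge(age: number): void;
}

class Cat implements CatLike {
  private name: string;
  private age: number;

  constructor(name: string, age: number) {
    this.name = name;
    this.age = age;
  }

  getName(): string {
    return this.name;
  }

  setName(name: string): void {
    this.name = name;
  }

  getAge(): number {
    return this.age;
  }

  setAge(age: number): void {
    this.age = age;
  }
}

// The decorator wraps any CatLike and is itself a CatLike.
class CatWithLogging implements CatLike {
  private readonly cat: CatLike;

  constructor(cat: CatLike) {
    this.cat = cat;
  }

  // Logs a call, delegates it to the decoratee, then logs the result.
  private logCall<T>(method: string, args: unknown[], call: () => T): T {
    console.log(`Executing Cat.${method}`);
    console.log("Args", ...args);
    const result = call();
    console.log("Result", result);
    return result;
  }

  getName(): string {
    return this.logCall("getName", [], () => this.cat.getName());
  }

  setName(name: string): void {
    this.logCall("setName", [name], () => this.cat.setName(name));
  }

  getAge(): number {
    return this.logCall("getAge", [], () => this.cat.getAge());
  }

  setAge(age: number): void {
    this.logCall("setAge", [age], () => this.cat.setAge(age));
  }
}

// A consumer that only knows about CatLike.
const greet = (cat: CatLike): string => `Hello, ${cat.getName()}!`;

const felix: CatLike = new CatWithLogging(new Cat("Felix", 3));
greet(felix); // logs the getName call and returns "Hello, Felix!"
felix.setAge(4);
```

The consumer never learns that it received a decorated cat, which is exactly the property the dependency injection discussion below relies on.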

This logging decorator is a little bit boring, but decorators also handle more interesting concerns gracefully, such as:

- Memoization

- Throttling

- Debouncing

- Retrying/Polling

- Timing

- Batching

- Request Deduplication

These are generic decorators that can be applied to a very large set of functions, but there are also more specific decorators, meant to be used with only a few particular functions.
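To make one of the generic concerns listed above concrete, here is a minimal memoization decorator sketch. It assumes the decoratee is pure and that its arguments can be turned into a cache key with JSON.stringify, which won’t hold for things like functions or cyclic objects; withMemoization and slowSquare are hypothetical names used only for this example.

```typescript
// Caches results keyed by the JSON-serialized argument list.
// Assumes a pure decoratee with JSON-serializable arguments.
const withMemoization = <Args extends unknown[], Result>(
  decoratee: (...args: Args) => Result
) => {
  // One cache per decorated function.
  const cache = new Map<string, Result>();

  return (...args: Args): Result => {
    const key = JSON.stringify(args);
    if (cache.has(key)) {
      return cache.get(key) as Result;
    }
    const result = decoratee(...args);
    cache.set(key, result);
    return result;
  };
};

// Hypothetical "expensive" function that counts its own executions.
let calls = 0;
const slowSquare = (n: number): number => {
  calls += 1;
  return n * n;
};

const fastSquare = withMemoization(slowSquare);
fastSquare(4); // computes 16
fastSquare(4); // returns the cached 16 without calling slowSquare again
```

As with the logging decorator, slowSquare itself is untouched, and the same withMemoization can wrap any other pure function.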

Back to dependency injection: since services are decoupled from the concrete implementations of the dependencies they use, it’s very convenient to decorate those dependencies, because we can do so without either the service that uses a dependency or the dependency’s implementation being aware of the decoration.

In this sense, it’s a very concrete way of adhering to the open/closed principle, where we extend the functionality of our dependencies without having to modify either the dependents or the dependency.

If we’re creating our dependencies manually, it’s very easy to decorate them:

// withLogging.ts

export const withLogging =
  <Args extends unknown[], ReturnValue>(
    decoratee: (...args: Args) => ReturnValue
  ) =>
  (...args: Args): ReturnValue => {
    const returnValue = decoratee(...args);
    console.log(`Executing ${decoratee.name}:`);
    console.log("Args:", ...args);
    console.log("Return Value:", returnValue);
    return returnValue;
  };

// container.ts

import { withLogging } from "./withLogging";
// (makeDepA and makeDepB imports omitted)

const depA = withLogging(makeDepA());
const depB = makeDepB({ depA });

export const container = {
  depA,
  depB,
};
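To see why neither side needs to know about the decoration, here is a self-contained sketch of the manual container above collapsed into one file. The bodies of makeDepA and makeDepB are hypothetical (a price formatter and a cart total); depB only declares that it needs a function with depA’s type, so it receives the logged version without any change to its code, and makeDepA is untouched as well.

```typescript
// Same shape as the withLogging decorator above.
const withLogging =
  <Args extends unknown[], ReturnValue>(
    decoratee: (...args: Args) => ReturnValue
  ) =>
  (...args: Args): ReturnValue => {
    const returnValue = decoratee(...args);
    console.log(`Executing ${decoratee.name}:`);
    console.log("Args:", ...args);
    console.log("Return Value:", returnValue);
    return returnValue;
  };

// Hypothetical dependency: formats an amount in cents as dollars.
type FormatPrice = (cents: number) => string;

const makeDepA = (): FormatPrice =>
  function formatPrice(cents: number): string {
    return `$${(cents / 100).toFixed(2)}`;
  };

// Hypothetical service that knows depA only through its type.
const makeDepB =
  ({ depA }: { depA: FormatPrice }) =>
  (items: number[]): string =>
    depA(items.reduce((total, cents) => total + cents, 0));

// The container is the only place aware of the decoration.
const depA = withLogging(makeDepA());
const depB = makeDepB({ depA });

const container = { depA, depB };

container.depB([199, 250]); // logs the formatPrice call and returns "$4.49"
```

Removing the logging is a one-line change in the container; neither factory has to be edited.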

If, however, we’re using an automatic DI container, like Awilix, we need a few tweaks:

// decoratorFactoryWrapper.ts

type Decoratee<Args extends unknown[], ReturnValue> = (
  ...args: Args
) => ReturnValue;

// This is a helper to decorate functions
// that are created via a factory, given that
// Awilix creates our dependencies automatically,
// and there's no easy way to "hook into" this creation
// process to decorate a dependency before it's passed
// to other services' factories
export const decoratorFactoryWrapper =
  <Dependencies, Args extends unknown[], ReturnValue>(
    factory: (deps: Dependencies) => Decoratee<Args, ReturnValue>,
    decorator: (
      decoratee: Decoratee<Args, ReturnValue>
    ) => Decoratee<Args, ReturnValue>
  ) =>
  (deps: Dependencies) =>
    decorator(factory(deps));

// Note that this helper is a decorator itself!
// It decorates the dependency factory and in doing
// so, it applies the "main" decorator to the factory's
// return value.
// Decorator-ception

// container.ts

import { asFunction } from "awilix";
import { decoratorFactoryWrapper } from "./decoratorFactoryWrapper";
import { withLogging } from "./withLogging";
// (makeDepA and makeDepB imports omitted)

export const dependencies = {
  //...
  depA: asFunction(decoratorFactoryWrapper(makeDepA, withLogging)).singleton(),
  depB: asFunction(makeDepB).singleton(),
  //...
};
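In Awilix’s default PROXY injection mode, a function registered with asFunction is called with a single object holding its dependencies, so the wrapper’s effect can be checked without Awilix at all. A sketch, assuming a hypothetical makeDepA factory and a hypothetical withCallCount decorator, that calls the wrapped factory directly with a plain dependencies object, roughly as the container would:

```typescript
type Decoratee<Args extends unknown[], ReturnValue> = (
  ...args: Args
) => ReturnValue;

// Same helper as above: the returned factory builds the dependency
// and hands it to the decorator before anyone else sees it.
const decoratorFactoryWrapper =
  <Dependencies, Args extends unknown[], ReturnValue>(
    factory: (deps: Dependencies) => Decoratee<Args, ReturnValue>,
    decorator: (
      decoratee: Decoratee<Args, ReturnValue>
    ) => Decoratee<Args, ReturnValue>
  ) =>
  (deps: Dependencies) =>
    decorator(factory(deps));

// Hypothetical decorator that counts how often the decoratee runs.
let callCount = 0;
const withCallCount =
  <Args extends unknown[], ReturnValue>(
    decoratee: Decoratee<Args, ReturnValue>
  ): Decoratee<Args, ReturnValue> =>
  (...args: Args) => {
    callCount += 1;
    return decoratee(...args);
  };

// Hypothetical factory with a dependency of its own.
const makeDepA =
  ({ prefix }: { prefix: string }) =>
  (name: string): string =>
    `${prefix} ${name}`;

// Roughly what asFunction(...).singleton() does on first resolution:
// call the (wrapped) factory once with the resolved dependencies.
const makeDecoratedDepA = decoratorFactoryWrapper(makeDepA, withCallCount);
const depA = makeDecoratedDepA({ prefix: "Hello," });

depA("Ada"); // returns "Hello, Ada" and increments callCount
```

This relies on PROXY mode: in CLASSIC mode, Awilix resolves dependencies by parsing the function’s parameter names, so it would look for a registration called deps.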

Migrating a Codebase to Use DI

When working with an existing codebase that does not use DI, there are a few approaches we can use to gradually “convert it” to use DI.

The main difference between these approaches is how “conservative” or “disruptive” each one is.

More conservative approaches change the codebase in a hardly noticeable way and require very little refactoring upfront, while also making some DI benefits inaccessible at first.

On the other hand, more disruptive approaches require lots of refactoring but allow us to reap the full range of benefits associated with using DI.

We’ll start with the more conservative approaches and then progress to the more disruptive ones.

Per-File, Containerless Dependency Injection

The easiest way to start using dependency injection in a codebase is to do the same thing we’ve shown at the start of the first post of the series, where we keep a dependency’s factory and its corresponding built service in the same file:

Before:

// createOrder.ts

import { db, isDbError } from "./db";
import { sendNotification, isNotificationError } from "./sendNotification";
import { logger } from "./logger";
import { Order } from "./order";

export type OrderDTO = {
  userId: string;
  productId: string;
  quantity: number;
  timestamp: string;
};

export const createOrder = async (
  orderDTO: OrderDTO
): Promise<Order | undefined> => {
  const log = process.env.NODE_ENV === "development" ? console.log : logger;

  try {
    const order = await db.insert("orders", orderDTO);
    await sendNotification(`Order ${order.id}`);
    return order;
  } catch (error) {
    if (isDbError(error)) {
      log(error);
      return;
    }

    if (isNotificationError(error)) {
      log(error);
      return;
    }

    throw error;
  }
};

After:

// createOrder.ts

import { db, isDbError } from "./db";
import { sendNotification, isNotificationError } from "./sendNotification";
import { logger } from "./logger";
import { Order } from "./order";

export type OrderDTO = {
  userId: string;
  productId: string;
  quantity: number;
  timestamp: string;
};

type Dependencies = {
  db: typeof db;
  sendNotification: typeof sendNotification;
  log: (error: unknown) => void;
};

export const makeCreateOrder =
  ({ db, sendNotification, log }: Dependencies) =>
  async (orderDTO: OrderDTO): Promise<Order | undefined> => {
    try {
      const order = await db.insert("orders", orderDTO);
      await sendNotification(`Order ${order.id}`);
      return order;
    } catch (error) {
      if (isDbError(error)) {
        log(error);
        return;
      }

      if (isNotificationError(error)) {
        log(error);
        return;
      }

      throw error;
    }
  };

// From the clients' perspective nothing has changed,
// as this file keeps on exporting a createOrder
export const createOrder = makeCreateOrder({
  db,
  sendNotification,
  log: process.env.NODE_ENV === "development" ? console.log : logger,
});

With this approach, we can change a single file at a time, and all the clients of our service can keep consuming it pretty much the same way they did before. This has a crucial social upside: the approach introduces practically zero friction for your team members, which in many cases is very important.

This approach involves the least amount of change and refactoring, but since we’re not using a container, things like integration testing, or changing the implementation of a service for all its consumers at once, become more cumbersome.
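What this approach does unlock right away is exercising makeCreateOrder with fake dependencies. The sketch below is self-contained, so it inlines simplified, hypothetical stand-ins for what createOrder.ts imports from ./order, ./db, and ./sendNotification (the Order shape, DbError, and the two type guards are assumptions, not the real modules), plus makeCreateOrder with the same behavior as above.

```typescript
// Hypothetical stand-ins for the imported modules.
type OrderDTO = {
  userId: string;
  productId: string;
  quantity: number;
  timestamp: string;
};

type Order = OrderDTO & { id: string };

class DbError extends Error {
  constructor(message: string) {
    super(message);
    this.name = "DbError";
  }
}

// Guards check `name` so they also work when Error subclassing is downleveled.
const isDbError = (error: unknown): error is DbError =>
  error instanceof Error && error.name === "DbError";
const isNotificationError = (error: unknown): error is Error =>
  error instanceof Error && error.name === "NotificationError";

type Dependencies = {
  db: { insert: (table: string, row: OrderDTO) => Promise<Order> };
  sendNotification: (message: string) => Promise<void>;
  log: (error: unknown) => void;
};

// Same behavior as makeCreateOrder above.
const makeCreateOrder =
  ({ db, sendNotification, log }: Dependencies) =>
  async (orderDTO: OrderDTO): Promise<Order | undefined> => {
    try {
      const order = await db.insert("orders", orderDTO);
      await sendNotification(`Order ${order.id}`);
      return order;
    } catch (error) {
      if (isDbError(error) || isNotificationError(error)) {
        log(error);
        return;
      }
      throw error;
    }
  };

// Fakes that record calls instead of touching a real DB or notifier.
const sentMessages: string[] = [];
const loggedErrors: unknown[] = [];

const createOrder = makeCreateOrder({
  db: { insert: async (_table, row) => ({ id: "order-1", ...row }) },
  sendNotification: async (message) => {
    sentMessages.push(message);
  },
  log: (error) => {
    loggedErrors.push(error);
  },
});

// A variant whose fake DB always fails.
const failingCreateOrder = makeCreateOrder({
  db: {
    insert: async () => {
      throw new DbError("connection refused");
    },
  },
  sendNotification: async () => {},
  log: (error) => {
    loggedErrors.push(error);
  },
});

const dto: OrderDTO = {
  userId: "user-1",
  productId: "product-1",
  quantity: 2,
  timestamp: "2024-01-01T00:00:00.000Z",
};

// Resolves with the fake order; sentMessages then holds "Order order-1".
createOrder(dto).then((order) => console.log(order?.id, sentMessages));
```

No module mocking is needed: the fakes are passed in exactly like the real db, sendNotification, and log are.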

Introducing the Container in a Top-Down Manner

In this second approach, we move the instantiation of dependencies to an actual container. This can be used either as the default approach from the very beginning of the migration process, or as a second step after the first approach, once a significant part of the codebase has already been migrated with it.

The key idea of this approach is to migrate dependencies in a top-down manner, that is, we start with the dependencies that get called at the application’s entry point and then move on to the lower-level dependencies.

So, let’s say our application’s entry point looks like this:

// main.ts

import { run } from "./run";

const main = () => {
  // Node has no process.argc; the argument count is process.argv.length
  run(process.argv.length, process.argv);
};

main();

The first function to be called (aside from main) is the run function, so this is the first thing we’ll put in our container:

// container.ts

import { makeRun } from "./run";
import { depA } from "./depA";
import { depB } from "./depB";
import { depC } from "./depC";

const run = makeRun({
  depA,
  depB,
  depC,
});

export const container = {
  run,
};

// main.ts

// container is a named export, not a default one
import { container } from "./container";

const main = () => {
  container.run(process.argv.length, process.argv);
};

main();

By using this top-down approach, we never need to update the consumers of the dependencies we’re applying DI to. By the time we apply DI to some dependency, all of its consumers are already in the container, so nothing ever has to import from the container (aside, obviously, from the application’s entry point).

This approach can be used alongside the first one: for dependencies that our top-down migration hasn’t reached yet, we can still apply per-file, containerless DI in the meantime.

Populating the Container At Any Point of The Dependency Graph

In this third approach, we move dependencies to the container, but not necessarily in a top-down manner, as we did in the previous approach.

This is what the code looks like:

// createOrder.ts

import { db, isDbError } from "./db";
import { sendNotification, isNotificationError } from "./sendNotification";
import { Order } from "./order";

export type OrderDTO = {
  userId: string;
  productId: string;
  quantity: number;
  timestamp: string;
};

type Dependencies = {
  db: typeof db;
  sendNotification: typeof sendNotification;
  log: (error: unknown) => void;
};

export const makeCreateOrder =
  ({ db, sendNotification, log }: Dependencies) =>
  async (orderDTO: OrderDTO): Promise<Order | undefined> => {
    try {
      const order = await db.insert("orders", orderDTO);
      await sendNotification(`Order ${order.id}`);
      return order;
    } catch (error) {
      if (isDbError(error)) {
        log(error);
        return;
      }

      if (isNotificationError(error)) {
        log(error);
        return;
      }

      throw error;
    }
  };

// container.ts

import { makeCreateOrder } from "./createOrder";
import { db } from "./db";
import { sendNotification } from "./sendNotification";
import { logger } from "./logger";

// We're using a manual container here
// to simplify the example, but you could
// very well start with an automatic container already.
const createOrder = makeCreateOrder({
  db,
  sendNotification,
  log: process.env.NODE_ENV === "development" ? console.log : logger,
});

export const container = {
  createOrder,
};

In this approach, we not only have to change the service to which we’re applying DI, but also have to update all files that import this service, because they now need to import it from the container.

In the first post, we said that nothing should import the container, but this only holds as an end goal, or if we’re using DI from the very beginning of the project. Otherwise, while we’re migrating, we’ll have to live with some files importing the container until we apply DI to them as well.

One very important rule is that no file may both import the container and be imported by it, because this causes a circular import.

As a consequence, we must always apply DI to a file as a whole, never partially, so that it is either imported by the container or imports from it, but never both.

Final Considerations

In this post, we talked about some additional techniques surrounding dependency injection.

Always remember to apply these techniques judiciously: they may be useful in some cases, but overkill in others.

In the next post, we’ll talk about keeping the codebase modular while also using dependency injection.

Further Reading

Dependency Injection Principles, Practices and Patterns

Dependency Injection in NodeJS – by Rising Stack

Dependency Injection in NodeJS – by Jeff Hansen (Awilix Author)

Dependency Injection Vantagens, Desafios e Approaches – by Talysson Oliveira (Portuguese)

Six approaches to dependency injection – by Scott Wlaschin
